December 8, 2025

We are Unconventional AI.

The coming energy bottleneck for AI requires massive gains in computational efficiency. We’re going to make compute more efficient the same way that F1 racing teams make race cars more aerodynamic: by building a ‘biology-scale’ model of the intelligence layer in a silicon wind tunnel.

Neural networks already have a biological analogue. It’s called the human brain, and it relies on a mere 20 watts. Neurons use their inherent physical properties to build intelligence; we are building silicon circuits that demonstrate similar non-linear dynamics to build a new substrate for intelligence. By building the right isomorphism for intelligence, we’ll unlock efficiency gains far more effectively than simulating these processes with a digital computer.

Why now?

We are AI optimists and aspire to see its capabilities accessible to every individual. The capacity for inquiry and resolution, human coordination, and the marshalling of resources toward ambitious objectives will be unparalleled. AI will be central to human productivity. It’ll be everywhere.

Over the last 50 years, intellectual work has come to constitute a larger proportion of human production. Strategic thinking about labor organization and the physical environment significantly amplified resource utilization. The ultimate tool for organizing information was the computer. It’s unsurprising that the technology sector represents one of the largest industries globally.

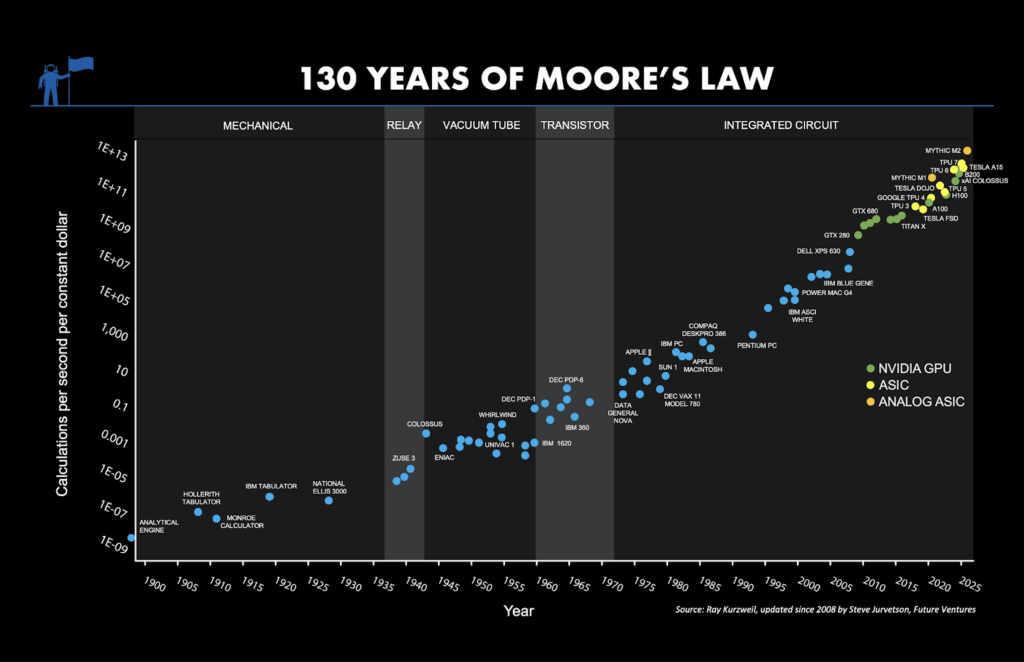

The cost of computation has declined continuously over the past half-century (see the chart of 130 years of Moore’s law).

Each price reduction has expanded applicability (as illustrated by the Jevons paradox). Computers were initially used only for high-value scientific or military endeavors. These applications subsequently gave way to business tools, gaming, and entertainment. Currently, computers serve as the foundation for AI, which is, in turn, generating unprecedented demand for computational power.

AI is fundamentally distinct from other forms of computation. It is redefining productivity and moving computers beyond mere organizational tools. Given the breadth of AI’s applicability, demand is projected to escalate at unprecedented rates. Should these projections materialize, computation will become constrained by global energy supply within the next 3–4 years.

What is required to scale AI to meet this burgeoning demand? We must engineer a more efficient computational substrate specifically for AI. That’s where Unconventional AI comes in.

Biology-scale energy efficiency is our goal. A neural network operates as a stochastic machine. When implemented on conventional computers, neural networks run on deterministic abstractions that ultimately execute on analog circuitry tuned to emulate digital behavior. This results in many inefficiencies. Instead, can we provide a software interface to the inherent physics of the silicon? In essence, we will run the neural network on the physics directly rather than simulating some physical system. This approach will enable capabilities far surpassing current models while consuming a mere fraction of the energy. The question we ask is, what is the right isomorphism for intelligence?

To help us achieve this goal, we have raised $475 million in seed funding, and the company is valued at $4.5 billion. The round was led by Lightspeed and Andreessen Horowitz, with participation from Sequoia, Lux Capital, DCVC, Future Ventures, Jeff Bezos, and many other amazing investing partners. Naveen Rao, cofounder and CEO, will personally be investing $10 million alongside others.

Be Unconventional

Developing this novel machine will be a complex undertaking, necessitating truly unconventional thinking. We require individuals capable of critically challenging assumptions and reasoning from first principles. This endeavor is as much an algorithmic and software challenge as one of novel hardware design. Extreme codesign is paramount. We invite qualified candidates to explore our open roles and join us in this Unconventional pursuit.

-MeeLan, Sara, Mike, and Naveen